Let me introduce Goio, the virtual assistant of Tenerife Tourism. Tenerife is one of the Canary Islands (Spain), where I was born and still live. It enjoys one of the best climates in the world, which is why it is often called the "island of eternal spring", with average temperatures between 17 °C and 24 °C throughout the year.

Thanks to this climate and to good sea and air connections, tourism is naturally the economic engine of the islands. We receive more than 22 million tourists a year, who can enjoy everything from some of the best beaches in Europe to stargazing under one of the best skies in the world for astronomy, not to mention a great variety of local cuisine.

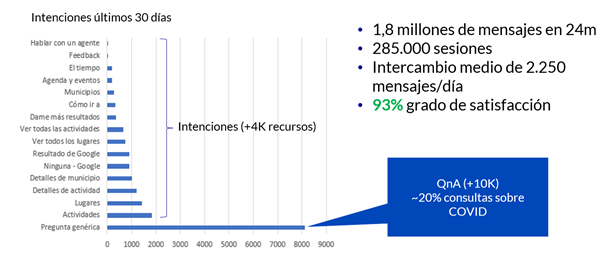

Tenerife is one of the main destinations in the country with the second-highest number of visitors per year in the world, so creating a virtual assistant to help travelers discover what the island offers is a great responsibility. At Intelequia we knew that developing an artificial intelligence to meet these needs would be a big challenge. What we didn't expect was that, after its launch in 2019, it would become an ally in informing both visitors and residents about the continuous updates and protocols during the health emergency. Nearly 20% of the queries made during the last 12 months have been about COVID.

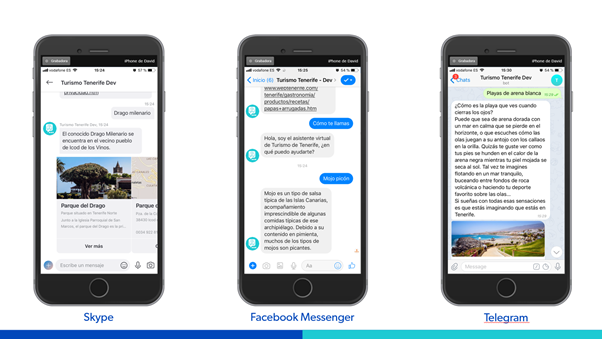

Goio is available on channels such as Webchat (including voice), Facebook and Telegram, and is now also available as an Alexa skill. It works in Spanish and English, and will soon be available in French and German as well. After handling more than 2 million messages in the last 24 months, we are happy to share that on May 20th it won "The Chatbot Tourism Awards 2021", organized by SEGITTUR and FITUR, at FITUR, the International Tourism Trade Fair.

How does Goio work?

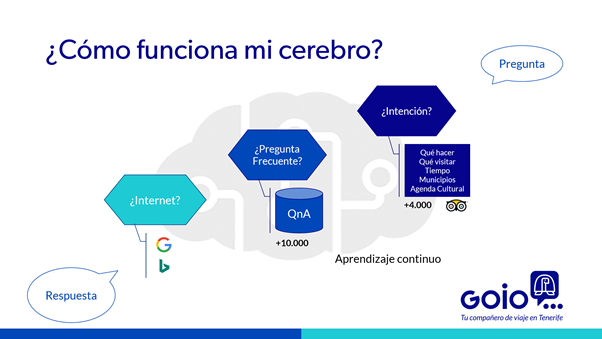

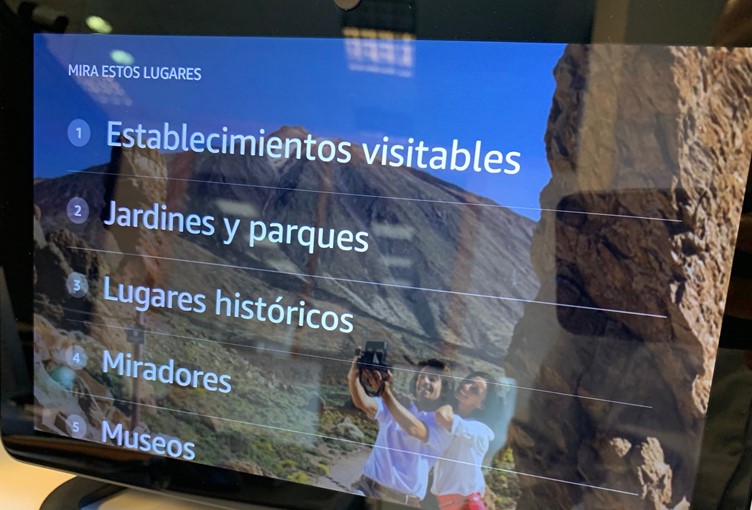

The virtual assistant is designed to answer voice or text queries using a natural language model that is continuously being trained. When a user asks a question, the assistant tries to identify the user's intent from a set of pre-trained intents, such as what to do on the island, which municipalities to visit, where to find the most hidden beach, or other activities to enjoy. These intents draw on more than 4,000 content resources integrated with the Tenerife Tourism website itself.

In addition to these resources, it can provide the weather forecast, directions from one point to another (including public transport), the cultural events calendar and, thanks to its integration with TripAdvisor, recommendations on where to eat.
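To make this first level more concrete, here is a minimal sketch of how an intent recognizer's output might be turned into a decision. It assumes the recognizer (LUIS, in Goio's case) returns one confidence score per intent, and that, as is conventional in LUIS apps, a `None` intent means "no match". The intent names, the `pick_intent` helper and the 0.5 threshold are hypothetical and not taken from Goio's implementation.

```python
from typing import Dict, Optional

# Hypothetical confidence threshold: below it, the query is treated as
# "no matching intent" and handed over to the next level.
INTENT_THRESHOLD = 0.5


def pick_intent(scores: Dict[str, float],
                threshold: float = INTENT_THRESHOLD) -> Optional[str]:
    """Return the highest-scoring intent, or None if no intent is confident enough.

    `scores` maps intent names to confidence values in [0, 1], as an
    intent recognizer would produce for a single user utterance.
    """
    if not scores:
        return None
    best_intent, best_score = max(scores.items(), key=lambda item: item[1])
    # The conventional "None" intent means the recognizer found no real match.
    if best_intent == "None" or best_score < threshold:
        return None
    return best_intent


if __name__ == "__main__":
    # Hypothetical scores for "Which beach is the most hidden?"
    print(pick_intent({"FindBeach": 0.87, "VisitMunicipality": 0.08, "None": 0.05}))
    # Low confidence everywhere: fall through to the next level.
    print(pick_intent({"FindBeach": 0.3, "None": 0.2}))
```

Returning `None` instead of a weak guess is what allows the question to fall through to the knowledge base rather than producing an off-topic answer.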

If the question does not match any of these intents, it moves on to a second level, where the assistant looks for an answer in a knowledge base of more than 10,000 general questions, including those related to the health emergency.

If no answer is found there either, a web search on Google and Bing is performed so that the user still gets a response. As a result, it is very rare for Goio to be unable to help with your needs as a traveler.
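The three levels described above form a fallback cascade: each level either answers or passes the question on. The sketch below illustrates only that control flow. The recognizer, knowledge base and web search are replaced with hypothetical in-memory stubs (in Goio these roles are played by LUIS, the QnA knowledge base and Google/Bing searches), and all names and canned answers are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Answer:
    text: str
    source: str  # level that produced it: "intent", "knowledge_base" or "web_search"


# Each level takes the user's question and returns an Answer, or None to pass it on.
Level = Callable[[str], Optional[Answer]]


def intent_level(question: str) -> Optional[Answer]:
    # Hypothetical stub standing in for intent recognition plus content lookup.
    if "beach" in question.lower():
        return Answer("Here are some beaches worth visiting...", "intent")
    return None


# Hypothetical stand-in for the knowledge base of general questions.
KNOWLEDGE_BASE = {
    "what is a barraquito": "A layered coffee drink typical of Tenerife.",
}


def knowledge_base_level(question: str) -> Optional[Answer]:
    # Normalize case and trailing punctuation before an exact-match lookup.
    key = question.lower().strip(" ?")
    text = KNOWLEDGE_BASE.get(key)
    return Answer(text, "knowledge_base") if text else None


def web_search_level(question: str) -> Optional[Answer]:
    # Hypothetical stub: a real implementation would call a web search API here.
    return Answer(f"Web results for: {question}", "web_search")


def answer(question: str, levels: List[Level]) -> Optional[Answer]:
    """Try each level in order and return the first answer found."""
    for level in levels:
        result = level(question)
        if result is not None:
            return result
    return None


LEVELS: List[Level] = [intent_level, knowledge_base_level, web_search_level]

if __name__ == "__main__":
    for q in ["Which beach is the most hidden?",
              "What is a barraquito?",
              "Ferry timetable to La Gomera"]:
        print(q, "->", answer(q, LEVELS).source)
```

Modelling each level as a function with the same signature keeps the order of the cascade in a single list, so adding or reordering a level does not touch the dispatch logic.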

Besides the standard messaging channels, Goio is now also available on Alexa, including Echo devices with displays. You can ask "Alexa, ask Goio what is a barraquito" or say "Alexa, open Goio" to see the available options.

What technologies are used?

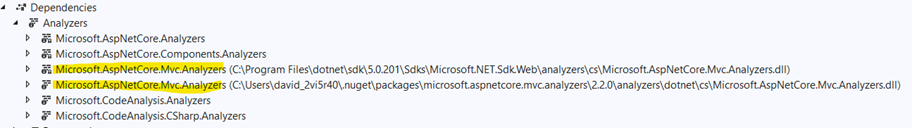

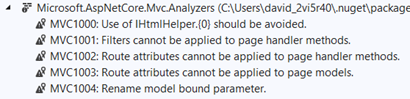

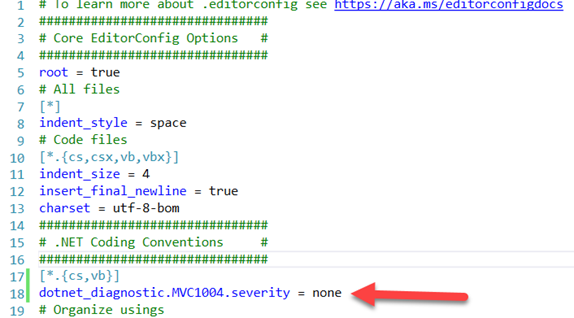

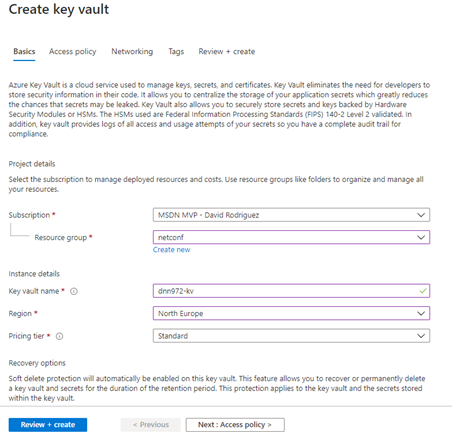

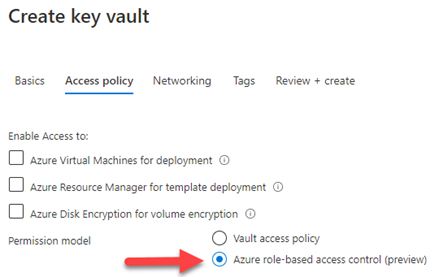

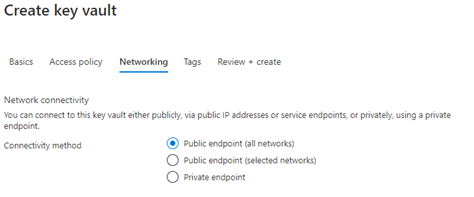

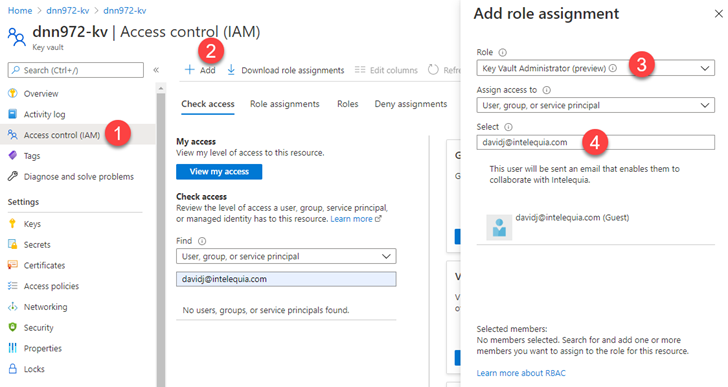

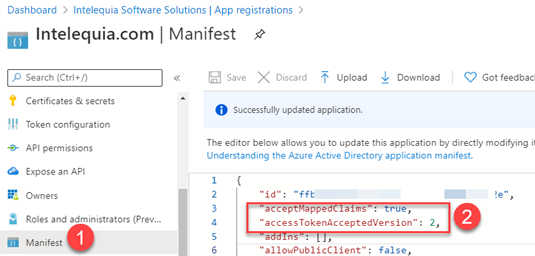

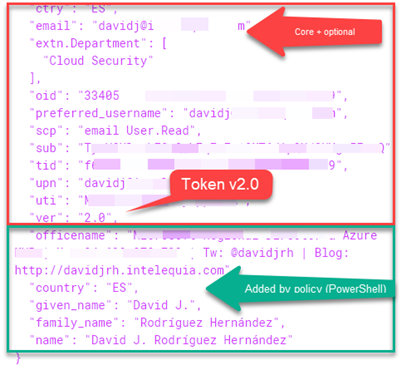

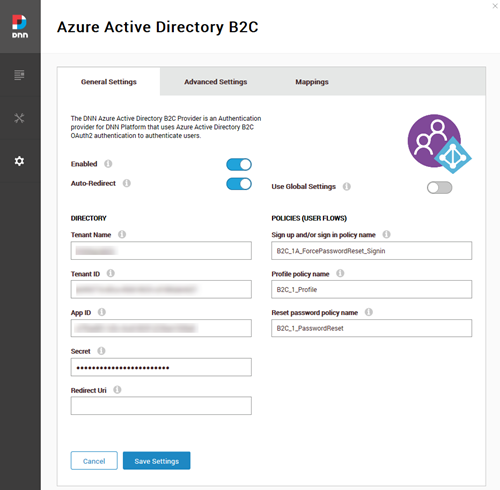

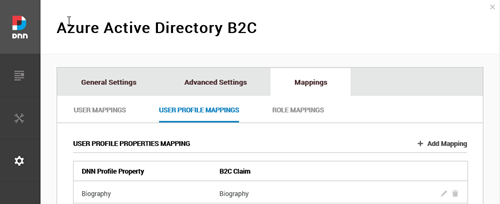

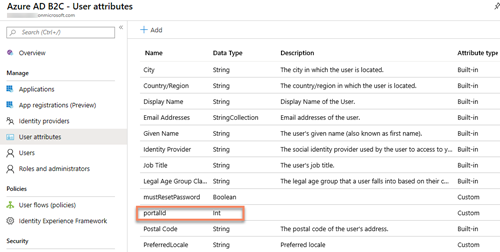

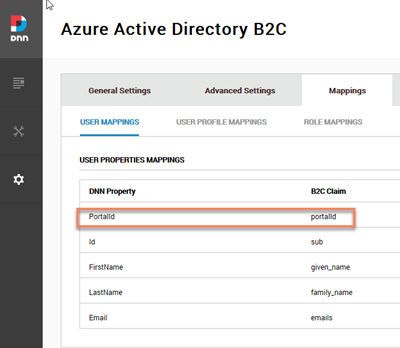

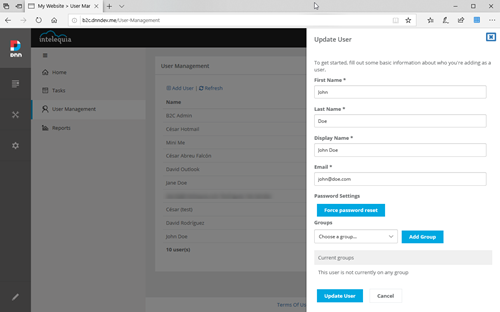

The virtual assistant is built on the Microsoft Azure platform by integrating several of its services, including:

- Azure Bot Service: to manage and integrate messaging channels such as Facebook, Telegram, Webchat and Alexa.

- Cognitive Services: LUIS to identify the user's intent, QnA Maker to store the knowledge base, and Speech Services for speech recognition and synthesis.

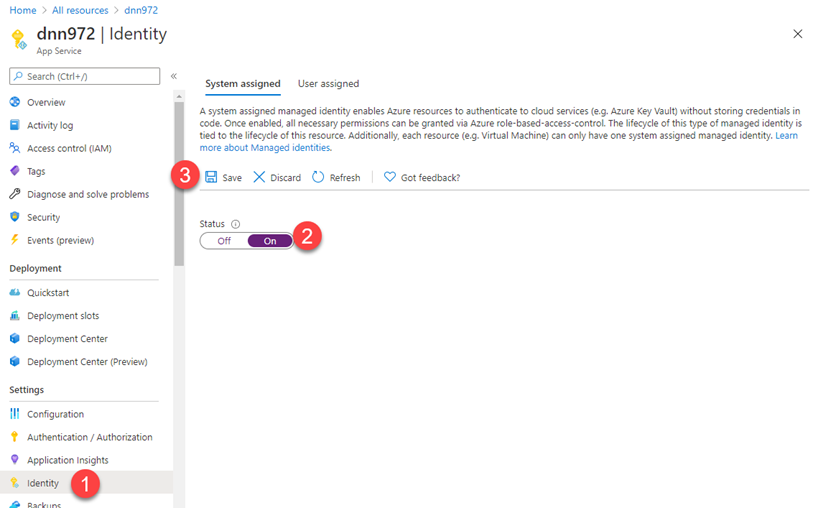

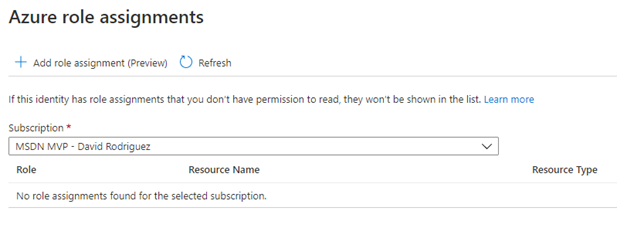

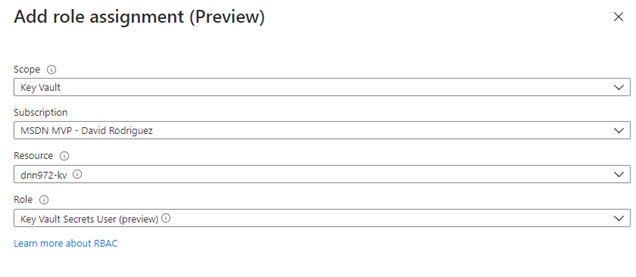

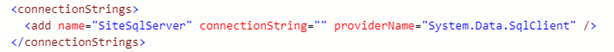

- App Service: to host the virtual assistant, developed with Microsoft Bot Framework and .NET Core.

- App Insights: to monitor conversations with the virtual assistant as part of the ongoing training process.

Stats

According to the latest statistics, over the last two years Goio has processed more than two million messages across nearly 300,000 user sessions, an average of 2,250 messages per day, 20% of which were COVID-related queries.

Also noteworthy is the great work done by the Infoten network to keep Goio's knowledge base up to date on relevant topics. As a result, the satisfaction rate has risen from 60% at launch in 2019 to 93% today, reflecting the continuous monitoring and improvement of the assistant using techniques such as Active Learning.
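Active Learning, as offered by QnA Maker, roughly works by spotting queries whose top candidate answers score very close to each other, so that editors can review them and add the user's wording as an alternative question. The sketch below illustrates that idea in simplified form. The `needs_review` and `collect_suggestions` helpers, the 0.05 margin and the sample log are hypothetical and do not reproduce the service's actual scoring rules.

```python
from typing import List, Tuple

# Hypothetical margin: if the two best candidate answers score within this
# distance of each other, the match is considered ambiguous.
AMBIGUITY_MARGIN = 0.05

# A candidate is an (answer_id, score) pair returned for one user query.
Candidate = Tuple[str, float]


def needs_review(candidates: List[Candidate],
                 margin: float = AMBIGUITY_MARGIN) -> bool:
    """Return True when the top two candidate answers score too close to call."""
    if len(candidates) < 2:
        return False
    top, second = sorted((score for _, score in candidates), reverse=True)[:2]
    return top - second <= margin


def collect_suggestions(log: List[Tuple[str, List[Candidate]]]) -> List[str]:
    """Return the queries from a conversation log that an editor should review."""
    return [query for query, candidates in log if needs_review(candidates)]


if __name__ == "__main__":
    # Hypothetical log entries: (user query, candidate answers with scores).
    sample_log = [
        ("covid test to enter Tenerife", [("qa_12", 0.72), ("qa_40", 0.70)]),
        ("best time to see the stars", [("qa_7", 0.90), ("qa_3", 0.40)]),
    ]
    print(collect_suggestions(sample_log))
```

Only ambiguous queries reach a human, which keeps the review workload small while still feeding real user phrasing back into the knowledge base.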

Don't go yet, there's more!

But that's not all. Creating an artificial intelligence is an iterative process of adding new skills by integrating cognitive services. The next iterations, already planned, include some interesting new features, such as finally bringing Goio to WhatsApp and Google Assistant, and enabling it to respond in French and German.

So stay tuned for the next updates, because Goio is growing up fast.

More information: